Testing Assumptions

Assumptions vs Questions

Questions are usually things we have identified that we don’t know the answer to, and they tend to arise from curiosity and an enquiring mindset. A question has a certain objectivity: we know that we don’t know the answer, and that we need more information, either from data or by asking others.

Assumptions, by contrast, tend to be a projection of our understanding of what the solution should be, and are usually based upon intuition rather than on qualified data about the problem.

We are often unaware of our assumptions. They can be an unconscious reaction to solve a problem quickly, based upon our existing understanding, experience or intuition. However, consciously and purposefully stating an assumption so that it can be tested is a useful technique: it focuses data gathering on proving or disproving the assumption, and can be used to set the hypothesis and framing of experiments.

Deriving questions and assumptions, and using them to set Sprint Goals to be tested, gives the work done in each Sprint a clear intent: to enrich the current understanding of the product or service with evidential data, feedback and new findings.

Sprints as Experiments

The easiest way to test a question or assumption is to conduct an experiment: build something quickly and evaluate it to gather more concrete data or evidence that answers the question, or proves or disproves the assumption.

One way to do this in a Scrum context is to craft a Sprint Goal that provides the reason, intent and “why” behind the work for the Sprint; in this case, for example, the goal would be to answer a question. The Sprint Goal then becomes the hypothesis of the experiment to be tested, allowing specific evidence to be collected and conclusions drawn.

Using Sprints in this way to conduct experiments can help to arrive at appropriate solutions in complex problem spaces, which may not otherwise have been possible to comprehend through traditional requirements gathering or spec-writing activities alone.

During Sprint Planning, the Sprint Goal can be referenced in Topic 1 to guide which work the team selects to test the hypothesis. In Topic 2, the Development Team then breaks this work down into how they are going to deliver it in the Sprint and conduct the experiment.

This sets up the Sprint to evaluate the assumption or question, with the Sprint Review used to inspect the resulting data, determine whether the hypothesis holds true, and decide what the next steps are. For example, the next Sprint Goal could move on to the next question or assumption, or test a refined assumption based upon what was found during the Sprint Review.

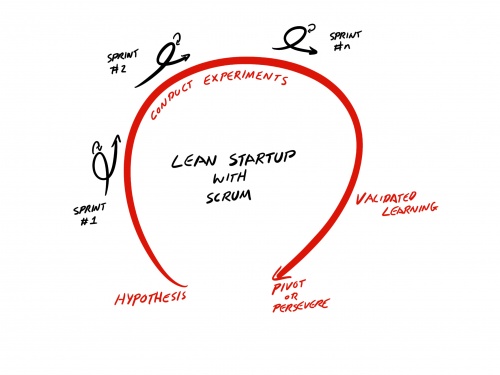

Lean Startup with Sprints

The Lean Startup movement[1] was initiated by Eric Ries and his book of the same name. The approach is much like using Sprints as experiments; however, when used within a Scrum context it conducts longer-running experiments that may span a number of Sprints, each building attributes to be tested and evaluated.

The approach begins with a hypothesis or question to be answered, which is normally quite high level and challenging. The experiment then runs and may well include several Sprints as mini experiments that contribute to the overall hypothesis. Each Sprint has its own Sprint Goal, which may address a sub-part of the overall hypothesis. Towards the end of the experiment, validated learning is collected, for example through the results of customer testing, surveys and other data that help to prove or disprove the hypothesis.

Finally, the cycle ends with a decision either to pivot and try a different hypothesis in the next experiment, or to persevere and continue refining the hypothesis based upon the findings and observations. The cycle then starts again with a new or refined hypothesis.

Applying this approach within a Scrum context allows high-level experiments to be conducted, using the Sprints either to run the experiment or to produce something that can be evaluated, such as a prototype submitted for customer testing.

Simulations, Prototypes, Games

Working in complex problem spaces can be a little overwhelming when trying to visualise possible solutions or put them down on paper. A much better way to engage more brain power is to build prototypes, run simulations or devise games that model the search space. Seeing things in 3D, interacting with them physically and playing against each other in a game setting can really help to bring the possibilities to light and enable context-sensitive solutions to emerge from the model.

Quick prototypes, such as paper prototypes, can help to illustrate new ideas and approaches. However, they need to be quick to construct and should show only the concepts rather than final features. (The previous section on Customer Research provides more examples and approaches to prototyping.)

Divergence vs. Convergence

Over a project or product development timeline, the most common approach is to start with an idea and then focus on refining it down to a defined solution, which is sometimes referred to as Convergent Thinking. This is good for exploiting and refining ideas, making critical choices and converging on a solution ahead of a release or delivery date.

In contrast, Divergent Thinking focusses on exploring and searching the solution space for possibilities. Solutions may be purposefully kept ambiguous so that an appropriate idea can emerge from the perceived chaos and confusion.

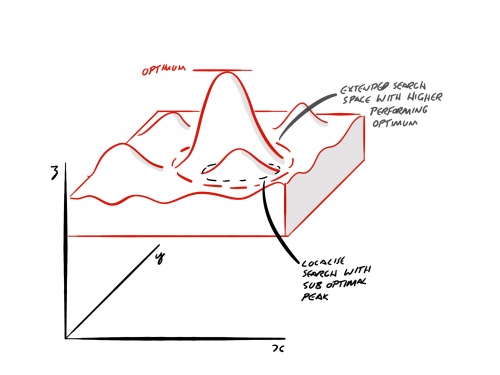

Min Max Problem aka “False Peaks”

This is a common problem when trying to reach an optimum within a localised search space: if the search space is too narrow, or not enough searching is done, then a higher-performing peak (a better solution) may be missed in favour of a lower, local one.

When there is schedule pressure or a need to deliver quickly, there can be a tendency to converge on a solution too early in order to make a release date or milestone. The overwhelming pull towards delivering overrides the willingness to search for and consider alternative solutions.

The challenge for the Product Owner is to hold their nerve, become comfortable with the ambiguity and use the uncertainty at the start of the timeline to their advantage by commissioning a range of experiments and prototypes that search the solution space for better possibilities. This might take the form of submitting a range of features for customer testing, or trying a number of prototypes to evaluate technical possibilities. The features or prototypes that score highly then become the basis for converging and refining towards an end solution.

Jumping into Convergent Thinking too quickly and too early in the timeline can constrain the number of possibilities and limit the outcomes to mediocre solutions. Hence, the Product Owner should also focus on trying new ideas and experiments in the early Sprints in order to accommodate some Divergent Thinking, rather than focussing only on converging.
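The “false peak” effect can be illustrated, purely as an analogy, with a simple local search. The minimal Python sketch below assumes a hypothetical one-dimensional landscape of solution values; the `LANDSCAPE` list and the `hill_climb` and `explore` functions are illustrative names, not part of any method described in this section. Greedy hill climbing from a single starting idea stops at the first peak it reaches, whereas climbing from several divergent starting points before converging can find a higher peak.

```python
# Hypothetical "solution landscape": the value of each candidate solution at
# positions 0..10. Position 2 is a false (local) peak with value 5;
# position 8 is the higher, global peak with value 9. Illustrative numbers only.
LANDSCAPE = [1, 3, 5, 4, 2, 3, 6, 8, 9, 7, 4]


def hill_climb(start: int) -> int:
    """Greedy local search: move to the better neighbour until none improves."""
    pos = start
    while True:
        neighbours = [p for p in (pos - 1, pos + 1) if 0 <= p < len(LANDSCAPE)]
        best = max(neighbours, key=lambda p: LANDSCAPE[p])
        if LANDSCAPE[best] <= LANDSCAPE[pos]:
            return pos  # no neighbour is better: a peak, possibly a false one
        pos = best


def explore(starts: list[int]) -> int:
    """Diverge first (climb from several starting points), then converge on the best peak."""
    return max((hill_climb(s) for s in starts), key=lambda p: LANDSCAPE[p])


# Converging early from a single idea stops at the false peak.
print(hill_climb(0), LANDSCAPE[hill_climb(0)])  # prints 2 5
# Exploring several starting points first reaches the higher peak.
print(explore([0, 5, 10]), LANDSCAPE[explore([0, 5, 10])])  # prints 8 9
```

In product terms, the extra starting points play the role of the range of experiments and prototypes commissioned early in the timeline; the final `max` is the point at which the team converges on the best-scoring option.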

Bias

When empathising with a customer’s needs, it is important to be aware of one’s own bias, which may be influencing how concepts are interpreted and new features devised. We all have our own interpretation of the world around us, and we can inadvertently influence or steer other people’s perspectives to match our own. This often manifests as unconscious suggestions, critique or passing comments that filter thoughts, ideas and even the interpretation of data to match our own understanding.

Being consciously aware of the impact that we have on others and on the end product can make the difference between a product that is received well by customers and one built on ideas that sounded great in the meeting room but fall flat when released into the world, because the solution is misaligned with customer needs.

Mental Models

Mental models are the unwritten rules that we have made to help us make sense of the world around us. They may be based upon our past experiences and what we understand to be true. Whilst they allow us to manage the huge amounts of information that we deal with on a day-to-day basis, mental models can also impede our creativity and sense of openness. For example, we might criticise ideas that don’t align with our own values and logical understanding.

As a Product Owner, it is important to recognise when your own mental models are at play and constraining the possibilities of what could be, and to make a conscious effort to be more open instead.

Product Owners are not alone in having mental models and somewhat rigid perspectives; consumers and customers have their own mental models too. When introducing a new feature or product, it is worth remembering that customers may not see what you see, and that their own experiences influence their openness to new ideas.

On the one hand, customers may perceive that they want only more of what they already know, and discount new ideas that run counter to that experience. On the other, surprising customers with unexpected features that enhance or revolutionise the way they currently do things may be a source of excitement in the product or service that other providers have missed. Generally, humans are not linear or simple, nor can they be easily categorised. They are deeply complex, mysterious and independent beings.

Cognitive Bias

There are many different types of cognitive bias. A common one, confirmation bias, is the tendency to seek out information that aligns with and reaffirms our pre-defined conclusions.

Data Bias, for example, leads individuals to review charts and graphs while filtering out everything except the information that supports their existing understanding. This can be quite frustrating when the data has been carefully curated as objective evidence, and yet a subjective “spin” on it is voiced and retained.

The Product Owner needs to be aware of their own cognitive bias and that of leaders and stakeholders, and to draw attention to what the feedback or data is actually telling them.

References

- [1] The Lean Startup, http://theleanstartup.com/ (accessed 4 July 2018)